In this article, we define the term regression and provide useful background for beginners.

Regression and prediction are powerful tools in predictive analysis. Linear regression is useful for determining a linear relationship between a target and one or more predictors. Regression analysis is a set of statistical processes for estimating the relationships among variables, and it includes techniques for modeling and analyzing the variables that affect a target outcome (Chatterjee and Hadi, 2015). Its main focus is the relationship between one dependent variable (the outcome) and one or more independent variables, which are called predictors. Regression analysis shows how the value of the dependent variable changes when one independent variable changes while the other independent variables are held constant.

A regression model relates the following quantities in a mathematical expression (Breiman, 2017):

- Unknown parameters, denoted $\beta$, which can be a scalar or a vector

- Independent variable X

- Dependent variable Y

The regression model is expressed as

$$Y = f(X, \beta)$$

The form of the function f, which expresses the relationship between X and Y, must be specified in advance; it is not derived from the data. If the true form is not known, a convenient form can be assumed. In simple linear regression, the dependent variable is a linear combination of the parameters, and plotting Y against X yields a straight line. In multiple linear regression, there are several independent variables, or functions of independent variables (Lewis-Beck, 2015).
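
For simple linear regression, a common choice of f is the straight line $Y = \beta_0 + \beta_1 X$. The minimal Python sketch below estimates the two parameters with the closed-form ordinary least squares formulas (slope = sum of cross-deviations divided by the sum of squared X-deviations). The function name `fit_simple_linear` and the data points are made up for illustration.

```python
def fit_simple_linear(xs, ys):
    """Ordinary least squares estimates (intercept, slope) for y = b0 + b1 * x."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = sum of cross-deviations / sum of squared x-deviations.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are equal, so the slope is undefined")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    # The fitted line always passes through the point (mean_x, mean_y).
    intercept = mean_y - slope * mean_x
    return intercept, slope

xs = [1, 2, 3, 4, 5]
ys = [3, 5, 7, 9, 11]            # hypothetical data lying exactly on y = 1 + 2x
b0, b1 = fit_simple_linear(xs, ys)
print(b0, b1)                    # 1.0 2.0
```

On Python 3.10 and later, the standard library's `statistics.linear_regression(xs, ys)` computes the same slope and intercept.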

With regression analysis, we can estimate the conditional expectation of the dependent variable given fixed values of the independent variables. This estimate is the average value of the dependent variable when the independent variables are fixed at those values (Lewis-Beck, 2015). The result is a regression function of the independent variables, which describes how the dependent variable behaves as the independent variables change. It is also useful to characterize the variation of the dependent variable around the prediction of the regression function with a probability distribution.
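
To make "conditional expectation" concrete: when the same value of X occurs several times, the average of the Y values observed at that X is a simple empirical estimate of E[Y | X = x]. The sketch below computes these averages; the helper name `conditional_means` and the data are hypothetical.

```python
from collections import defaultdict

def conditional_means(xs, ys):
    """Average y for each distinct x: an empirical estimate of E[Y | X = x]."""
    groups = defaultdict(list)
    for x, y in zip(xs, ys):
        groups[x].append(y)
    return {x: sum(vals) / len(vals) for x, vals in sorted(groups.items())}

xs = [1, 1, 2, 2, 3, 3]
ys = [2, 4, 5, 7, 8, 10]          # hypothetical repeated measurements
means = conditional_means(xs, ys)
print(means)                      # {1: 3.0, 2: 6.0, 3: 9.0}
```

Here the three averages lie exactly on the line y = 3x, which is also the line ordinary least squares fits to these six points. With real data, the regression function smooths noisy group averages rather than matching them exactly.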

Application of Regression Analysis

One of the most popular applications of regression analysis is prediction and forecasting, including in the field of machine learning. The relationships derived by regression analysis can also be used to understand which independent variables are related to the dependent variable and to explore the form of those relationships (Shyu et al., 2017). In some cases, regression analysis is also used to infer causal relationships between the independent and dependent variables. But since correlation does not imply causation, such conclusions can be misleading: unless the relationship between specific dependent and independent variables is supported by established physical laws, a relationship based merely on data may be spurious.

Interpolation and Extrapolation

Regression models make it possible to predict the value of a variable Y given the values of X. If the prediction is made within the range of values in the data set on which the model was developed, it is called interpolation; if it is made outside that range, it is called extrapolation. The accuracy of extrapolated predictions depends heavily on the underlying regression assumptions. If the extrapolation is made for values far beyond the range of the data set, the model can fail, because the assumptions that held for the sample may not hold there.
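
A minimal sketch of the distinction for a model with a single predictor: a prediction is labeled interpolation when the new x lies between the smallest and largest x in the training data, and extrapolation otherwise. The fitted line y = 1 + 2x and the helper name `predict_with_range_check` are hypothetical.

```python
def predict_with_range_check(intercept, slope, x_train, x_new):
    """Predict y = intercept + slope * x_new and report interpolation vs extrapolation."""
    lo, hi = min(x_train), max(x_train)
    kind = "interpolation" if lo <= x_new <= hi else "extrapolation"
    return intercept + slope * x_new, kind

x_train = [1.0, 2.0, 3.0, 4.0, 5.0]   # hypothetical training inputs
inside = predict_with_range_check(1.0, 2.0, x_train, 3.5)
outside = predict_with_range_check(1.0, 2.0, x_train, 50.0)
print(inside)    # (8.0, 'interpolation')
print(outside)   # (101.0, 'extrapolation')
```

With several predictors, lying inside each variable's individual range is not enough to guarantee interpolation, because the combination of values may still fall outside the region covered by the data.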

Techniques of Regression Analysis

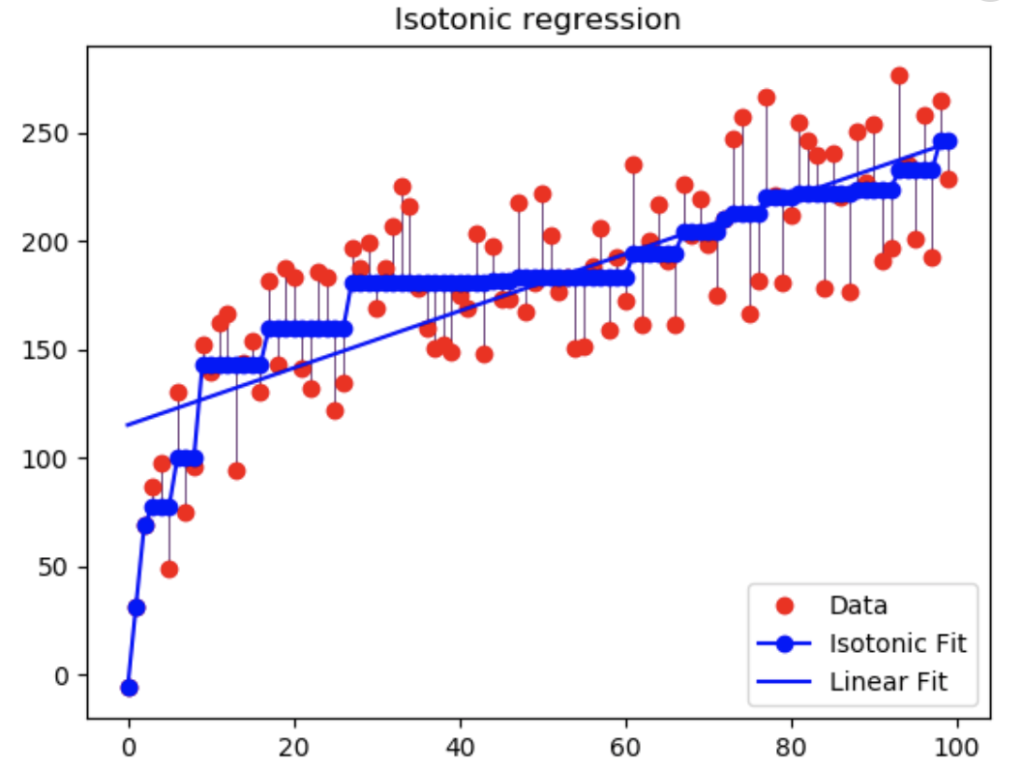

Many techniques have been developed for performing regression analysis. Linear regression and ordinary least squares (OLS) regression are parametric methods: the regression function is expressed in terms of a finite number of unknown parameters (representing the effects of the causative factors), which are estimated from the data set. In nonparametric regression, by contrast, the regression function is allowed to lie in a specified set of functions, which may be infinite-dimensional (Shyu et al., 2017).
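
To contrast the two families, the sketch below fits a parametric straight line (two parameters, estimated by ordinary least squares) and a simple nonparametric estimator, k-nearest-neighbour averaging, to the same curved data. k-nearest-neighbours is used here only because it is short to write; it is not the local regression method of Shyu et al. The data and the function names `fit_line` and `knn_regress` are illustrative assumptions.

```python
def fit_line(xs, ys):
    """Parametric: ordinary least squares (b0, b1) for y = b0 + b1 * x."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    b1 = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return my - b1 * mx, b1

def knn_regress(xs, ys, x_new, k=3):
    """Nonparametric: average y of the k training points closest to x_new."""
    if not 1 <= k <= len(xs):
        raise ValueError("k must be between 1 and the number of observations")
    nearest = sorted(zip(xs, ys), key=lambda p: abs(p[0] - x_new))[:k]
    return sum(y for _, y in nearest) / k

xs = [0, 1, 2, 3, 4, 5, 6]
ys = [x ** 2 for x in xs]        # hypothetical curved data: true value at x = 3 is 9

b0, b1 = fit_line(xs, ys)
linear_at_3 = b0 + b1 * 3        # the line's form is fixed before seeing the data
knn_at_3 = knn_regress(xs, ys, 3)
print(b0, b1, linear_at_3)       # -5.0 6.0 13.0
print(round(knn_at_3, 2))        # 9.67
```

The straight line predicts 13.0 at x = 3, where the true value is 9, because its shape is fixed in advance; the neighbour average (about 9.67) follows the curvature because it assumes no particular shape for the regression function.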

The accuracy of a regression analysis depends on how the data were gathered and how well that process matches the regression technique employed. Usually, when a data set is given, the actual data-gathering process is unknown or is not taken into account, so regression analysis makes some assumptions about it. When these assumptions are moderately violated, regression models can still yield acceptable results, though they may not perform optimally (Chatterjee and Hadi, 2015). But if the dependence between the dependent and independent variables is weak, or if causality is assumed simply from the observed data, regression models can produce misleading results.
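
One common way to check a regression assumption after fitting is to inspect the residuals (observed minus fitted values). The sketch below fits a straight line by ordinary least squares to hypothetical data that actually follow a curve and prints the residuals; the helper names are illustrative.

```python
def fit_line(xs, ys):
    """Ordinary least squares estimates (b0, b1) for y = b0 + b1 * x."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    b1 = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return my - b1 * mx, b1

def residuals(xs, ys, b0, b1):
    """Observed minus fitted values for each observation."""
    return [y - (b0 + b1 * x) for x, y in zip(xs, ys)]

xs = [1, 2, 3, 4, 5]
ys = [1, 4, 9, 16, 25]           # hypothetical data that actually follow y = x**2
b0, b1 = fit_line(xs, ys)
res = residuals(xs, ys, b0, b1)
print(res)                       # [2.0, -1.0, -2.0, -1.0, 2.0]
```

The residuals sum to zero, as they always do for a least squares line with an intercept, but they are positive at both ends and negative in the middle. Under a correctly specified linear model they would scatter with no systematic pattern, so this U shape signals that the straight-line assumption is violated.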

Algorithms in Linear Regression Models

An algorithm is a well-defined sequence of steps, often drawn as a flowchart, that a computer program follows to solve a problem. The same algorithm can be applied to different data sets. In linear regression, the algorithm builds a model from the data set and then uses it to predict the outcome for new values of the independent variables.

A simple linear regression model lets the user study the relationship between two variables: there is only one input (independent) variable. If there are multiple input variables, the model is called multiple linear regression. Linear regression is used in applications such as financial portfolio prediction, real estate price prediction, salary forecasting and estimating the time of arrival (ETA) in traffic (Chatterjee and Hadi, 2015).
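
Multiple linear regression extends the straight line to $Y = \beta_0 + \beta_1 X_1 + \dots + \beta_p X_p$. The sketch below estimates the coefficients by solving the ordinary least squares normal equations $(X^\top X)\beta = X^\top y$ with Gauss-Jordan elimination in plain Python. The function names and data are hypothetical; the data are constructed so that y = 1 + 2x1 + 3x2 exactly. In practice, a library routine such as NumPy's `numpy.linalg.lstsq` is numerically safer than forming $X^\top X$ directly.

```python
def fit_multiple_linear(X, y):
    """OLS coefficients [b0, b1, ..., bp] from the normal equations (X^T X) b = X^T y."""
    rows = [[1.0] + [float(v) for v in r] for r in X]   # leading 1.0 = intercept term
    p = len(rows[0])
    # Augmented matrix [X^T X | X^T y], one row per coefficient.
    A = [[sum(r[i] * r[j] for r in rows) for j in range(p)]
         + [sum(r[i] * yi for r, yi in zip(rows, y))]
         for i in range(p)]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(p):
        pivot = max(range(col, p), key=lambda k: abs(A[k][col]))
        if abs(A[pivot][col]) < 1e-12:
            raise ValueError("predictors are linearly dependent; coefficients are not unique")
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(p):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][p] / A[i][i] for i in range(p)]

def predict(coef, x):
    """Evaluate b0 + b1*x1 + ... + bp*xp for one observation x."""
    return coef[0] + sum(c * v for c, v in zip(coef[1:], x))

# Hypothetical observations generated from y = 1 + 2*x1 + 3*x2 (no noise).
X = [[0, 0], [1, 0], [0, 1], [1, 1], [2, 1]]
y = [1, 3, 4, 6, 8]
coef = fit_multiple_linear(X, y)
print([round(c, 6) for c in coef])       # [1.0, 2.0, 3.0]
print(round(predict(coef, [3, 2]), 6))   # 13.0
```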

Another regression model commonly used in industry is logistic regression. It is used when the dependent variable is binary, that is, it takes only one of two values, such as Yes or No, or Default or No Default. The independent variables, however, can be numerical. Logistic regression predicts the probability of each outcome, and this probability is converted into a binary forecast (Breiman, 2017). For example, a credit card company can build a logistic regression model from its data set to decide whether a given customer should be issued a credit card. The algorithm is based on maximum likelihood estimation: it determines the regression coefficients of the model that predicts the probability of the outcome, in this case “defaults” or “does not default”. The algorithm runs until its convergence criterion is satisfied or the maximum number of iterations is reached.
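
The sketch below illustrates maximum likelihood estimation for a one-predictor logistic model, $P(y = 1 \mid x) = 1/(1 + e^{-(\beta_0 + \beta_1 x)})$. It uses plain gradient ascent on the average log-likelihood, a simpler optimizer than the Newton-type (iteratively reweighted least squares) methods many statistical packages use, and it stops when the parameter updates fall below a tolerance or when a maximum number of iterations is reached. The learning rate, tolerance, data and function names are illustrative assumptions.

```python
import math

def sigmoid(z):
    """Logistic function, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def fit_logistic(xs, ys, lr=0.1, tol=1e-8, max_iter=100_000):
    """Maximum likelihood estimates (b0, b1) for P(y=1 | x) = sigmoid(b0 + b1*x).

    Gradient ascent on the average log-likelihood. Stops when both parameter
    updates are smaller than tol (convergence) or after max_iter iterations.
    Returns (b0, b1, iterations_used).
    """
    n = len(xs)
    b0 = b1 = 0.0
    for it in range(1, max_iter + 1):
        # Gradient of the average log-likelihood: mean of (y - p) and of (y - p) * x.
        g0 = g1 = 0.0
        for x, y in zip(xs, ys):
            err = y - sigmoid(b0 + b1 * x)
            g0 += err
            g1 += err * x
        step0, step1 = lr * g0 / n, lr * g1 / n
        b0, b1 = b0 + step0, b1 + step1
        if max(abs(step0), abs(step1)) < tol:
            return b0, b1, it
    return b0, b1, max_iter

# Hypothetical data: x is a risk score, y = 1 means "defaults", 0 means "does not default".
# The classes overlap (x = 3 defaults, x = 4 does not), so a finite maximum likelihood estimate exists.
xs = [1, 2, 3, 4, 5, 6]
ys = [0, 0, 1, 0, 1, 1]
b0, b1, iters = fit_logistic(xs, ys)
p = sigmoid(b0 + b1 * 5.5)               # estimated probability of default at x = 5.5
label = "defaults" if p >= 0.5 else "does not default"
print(iters, round(b1, 2), label)
```

The predicted probability is turned into a Yes/No forecast with a 0.5 threshold here; a lender could choose a different threshold depending on the cost of each kind of error.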

References

Breiman, L. (2017). Classification and regression trees. Routledge.

Chatterjee, S., & Hadi, A. S. (2015). Regression analysis by example. John Wiley & Sons.

Lewis-Beck, C., & Lewis-Beck, M. (2015). Applied regression: An introduction (Vol. 22). Sage Publications.

Shyu, W. M., Grosse, E., & Cleveland, W. S. (2017). Local regression models. In Statistical models in S (pp. 309-376). Routledge.
